FEATURED

Building the Decision Stack

Establishing the data foundation enterprise decisions depend on.

Platform Architecture · Systems of Record · Enterprise Scale

Building Systems That Scale Decisions

The Three Layers of Durable Scale

Complex platforms don’t fail from missing features — they fail from missing structure. My work focuses on building that structure.

Each case study reflects a distinct layer of that architecture:

Durable foundations

Governed intelligence

Disciplined ecosystem scale

Together, they show how I turn enterprise signals into decisive, scalable outcomes.

Designing Trustworthy AI

Engineering AI systems that increase velocity while preserving trust.

AI Governance · Risk Architecture · Privacy Controls · Executive Impact

Governing a Marketplace Ecosystem

Architecting marketplace systems where incentives and execution reinforce each other.

Marketplace Strategy · Roadmap Governance · Operational Scale

CASE STUDY 1

FOUNDATION

Building the Decision Stack

Architecting the foundational layer that enabled enterprise-scale decision velocity.

Platform Architecture · Systems of Record · Enterprise Scale

The Environment

As enterprise security complexity increased — from AI-driven threats to acquisitions and resource constraints — noise grew and decision-making slowed. Key environmental considerations:

Growing enterprise complexity

Fragmented asset visibility

Increasing vulnerability volume

Manual prioritization workflows

II. Standardization Enables Scale

Risk normalization embedded into core workflows.The Problem

The constraint wasn’t signal volume — it was architectural fragmentation.

Without a canonical system of record, risk signals-were fragmented and workflows couldn’t scale. Automation would have amplified noise instead of improving clarity. The Architecture

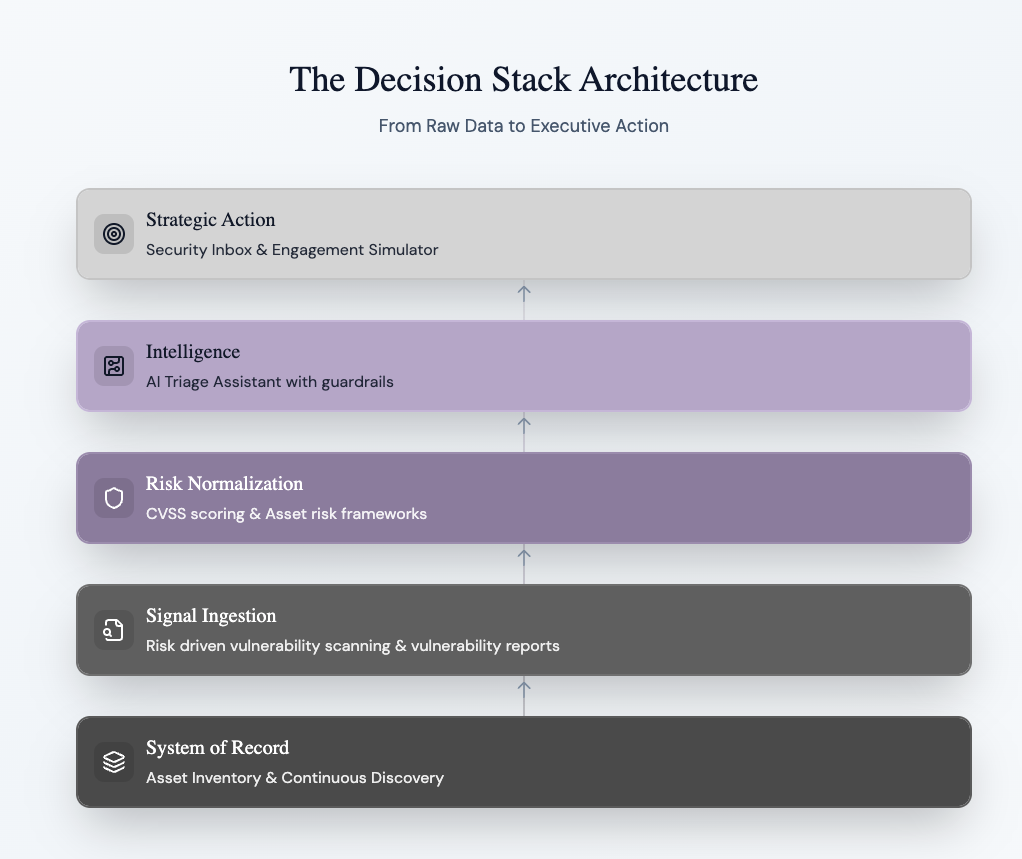

The Decision Stack | Raw Data -> Executive Action Velocity

I. Foundation Before Acceleration

Canonical asset models before automation.III. Workflow Before AI

Optimized for human cognition before acceleration.IV. Automation as a Final Layer

AI introduced only once governance and inputs were durable.The Tradeoffs

This was a live system — not a greenfield rebuild.

Prioritized canonical data models over feature velocity

Embedded risk normalization before introducing automation

Delayed AI deployment until governance was durable

Optimized for long-term scalability over short-term polish

This sequencing reduced systemic rework and increased enterprise trust.

GTM & Rapid Iterations

We enrolled a diverse group of early-adoption customers, from enterprise to mid-market, to accelerate feedback loops and rapidly iterate on the architecture, workflows, and features ahead of market launch.

Through early adoption, customers began:

Mapping attack surfaces continuously

Embedding normalized risk scoring into workflows

Accelerating triage without increasing noise

Enabling AI workflows on clean foundations

Most importantly: We didn’t launch features. We launched a decision system.

Impact

Continuous visibility across 30K+ enterprise assets

Automated risk normalization replacing manual prioritization

One-click asset assignment for scalable testing workflows

Increased enterprise adoption prior to full GTM

Published Materials

Foundation

Signal Ingestion

Risk Normalization

Intelligence

Strategic Actions

Automation does not create clarity — it amplifies the architecture beneath it.

Without a canonical foundation, AI increases entropy.

With durable systems of record, automation becomes a force multiplier — accelerating decisions while preserving trust.

CASE STUDY 2

AUTOMATIONS

Designing Trustworthy AI

From Signal to Trusted Remediation Velocity

Designing human-in-the-loop AI that accelerates triage without compromising trust.

AI Governance · Risk Architecture · Privacy Controls · Executive Impact

The Challenge

AI could accelerate prioritization — but in enterprise security, acceleration without governance amplifies risk.

Key considerations:

High vulnerability volume

Manual triage bottlenecks

Inconsistent severity decisions

Enterprise risk sensitivity

We needed trusted automation — not faster noise.

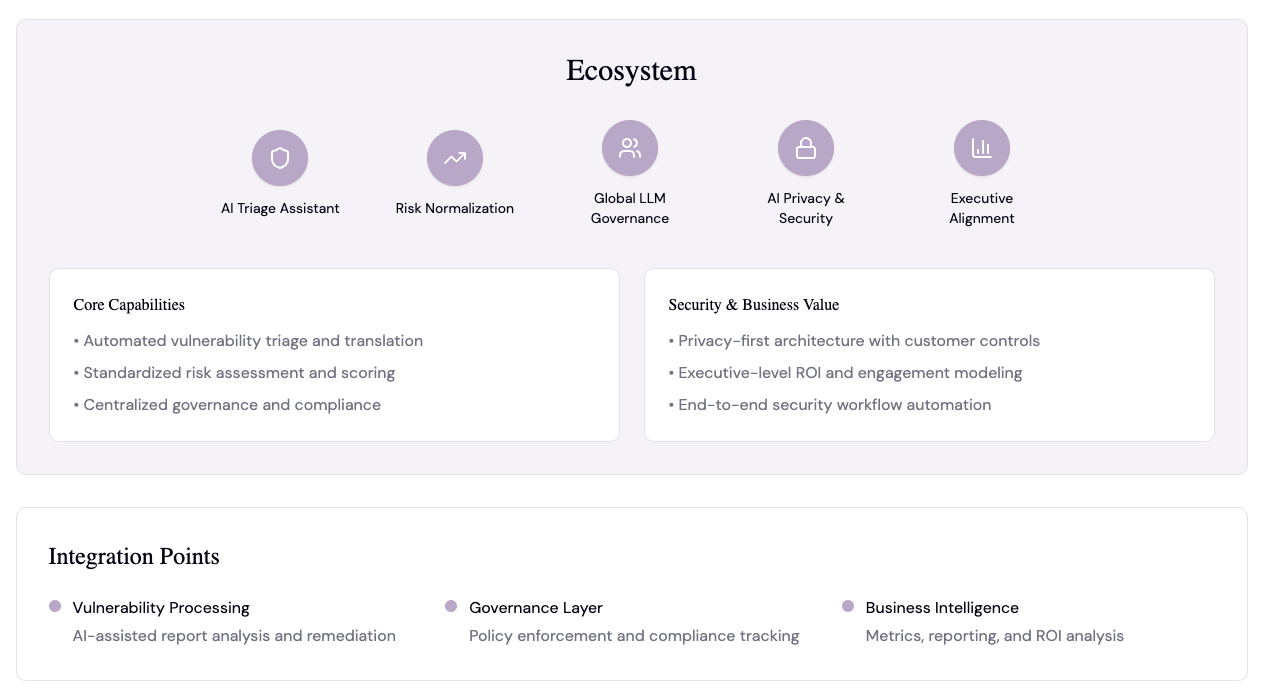

AI Architecture

As in the Decision Stack foundation, AI was layered only after risk normalization and governance were durable.

I. Standardize Before Acceleration

Embedded CVSS-to-VRT mapping before introducing AI.

II. Centralized LLM Governance

Organization-wide controls and guardrails.

III. Privacy by Default

Privacy-preserving architecture built into the core platform.

IV. Executive-Linked Outcomes

Modeled ROI to secure sustained executive alignment.

Tradeoffs We Made

Limited AI scope to assistive triage

Integrated privacy controls

Sequenced governance before broad enablement

Responsible AI

This was not experimental AI.

It was structured, governed, and executive-aligned from day one.

AI was introduced as an accelerator, not a replacement.

Enterprise Impact

The result was measurable operational leverage at enterprise scale:

70% reduction in manual triage workload

200+ AI-augmented organizations

20% to 27% week-over-week adoption growth during phased rollout


CASE STUDY 3

MARKETPLACES

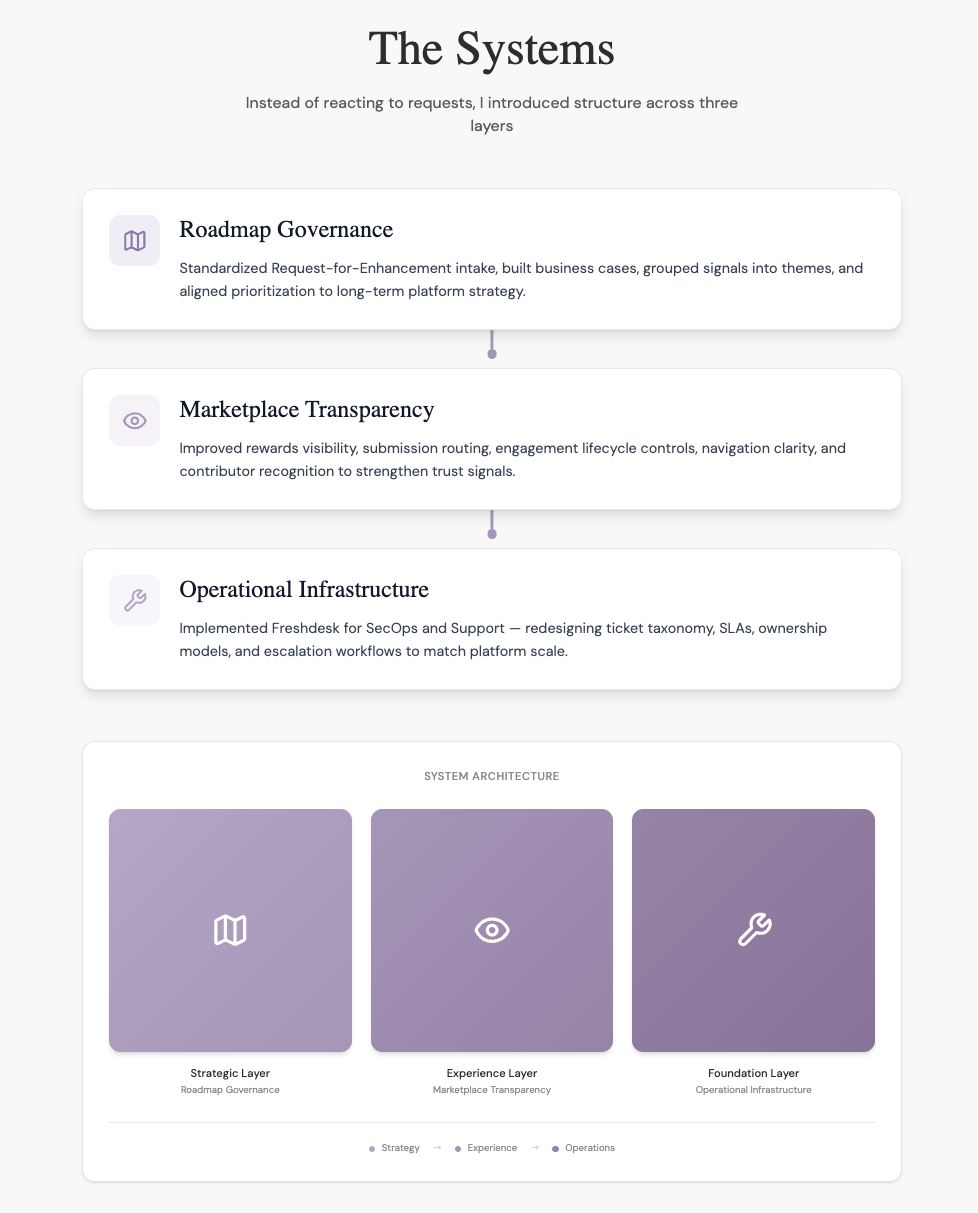

Governing a Marketplace Ecosystem

Scaling a multi-sided security marketplace through governance, roadmap discipline, and operational infrastructure, not feature sprawl.

Marketplace Strategy · Roadmap Governance · Operational Scale

The Scaling Tension

As enterprise adoption grew, complexity accelerated:

Competing enterprise, researcher, and internal feature requests

Researcher transparency expectations

Strained SecOps, Escalation, & Support workflows

Roadmap capacity limits

The challenge wasn’t growth. It was governance at scale.

Strategic Decisions

Prioritized platform integrity over customization

Standardized before scaling

Balanced enterprise demands with marketplace equity

Invested in internal systems to prevent downstream friction

Outcomes

The result was a healthier, more durable marketplace flywheel:

Scaled Marketplace Participation

Launched two incentive programs driving 27% year-over-year submission growth.

Expanded Contributor Pool

Increased the number of researchers qualified for private programs by 25% annually.

Improved Enterprise Experience

Established and operationalized a customer experience program, delivering a 10+ point quarterly CSAT increase.

These structural shifts strengthened both sides of the marketplace, improving signal quality, contributor participation, and enterprise trust simultaneously.